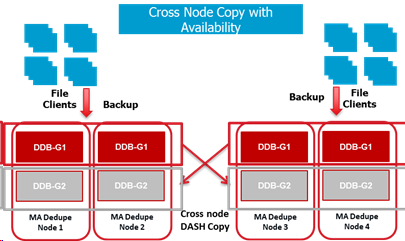

Deduplication partitioned extended mode uses a grid of two to four MediaAgents to host the individual physical partitions of larger logical deduplication databases (DDBs). Each MediaAgent can host up to two DDB partitions, and each grid can host up to two logical DDBs. This configuration is typically used to increase the front-end terabytes (FET) or back-end terabytes (BET) that a single DDB can manage, to provide extended or alternate retention by DASH copying data from the primary DDB to the secondary DDB, or to create cross-site copies of data in a disaster recovery (DR) configuration.
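The grid limits described above (two to four MediaAgents, up to two partitions per MediaAgent, up to two logical DDBs per grid) can be expressed as a simple layout check. The following is a minimal sketch under that reading of the text; the `Grid` and `Partition` classes and the MediaAgent and DDB names are hypothetical and are not part of any Commvault API.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Limits taken from the description above (design guidelines, not hard limits).
MIN_MEDIAAGENTS = 2
MAX_MEDIAAGENTS = 4
MAX_PARTITIONS_PER_MEDIAAGENT = 2
MAX_LOGICAL_DDBS_PER_GRID = 2


@dataclass
class Partition:
    """One physical DDB partition hosted on one MediaAgent (hypothetical model)."""

    media_agent: str
    logical_ddb: str  # name of the logical DDB this partition belongs to


@dataclass
class Grid:
    media_agents: list[str]
    partitions: list[Partition] = field(default_factory=list)

    def validate(self) -> list[str]:
        """Return a list of problems; an empty list means the layout fits the limits."""
        problems: list[str] = []
        count = len(self.media_agents)
        if not MIN_MEDIAAGENTS <= count <= MAX_MEDIAAGENTS:
            problems.append(f"grid has {count} MediaAgents; expected 2 to 4")
        for ma in self.media_agents:
            hosted = sum(1 for p in self.partitions if p.media_agent == ma)
            if hosted > MAX_PARTITIONS_PER_MEDIAAGENT:
                problems.append(f"{ma} hosts {hosted} partitions; maximum is 2")
        for p in self.partitions:
            if p.media_agent not in self.media_agents:
                problems.append(f"partition on unknown MediaAgent {p.media_agent}")
        logical = {p.logical_ddb for p in self.partitions}
        if len(logical) > MAX_LOGICAL_DDBS_PER_GRID:
            problems.append(f"grid has {len(logical)} logical DDBs; maximum is 2")
        return problems


# Four MediaAgents, each hosting one partition of a primary and one of a secondary DDB.
mas = ["ma1", "ma2", "ma3", "ma4"]
grid = Grid(mas, [Partition(ma, ddb) for ma in mas for ddb in ("primary", "secondary")])
print(grid.validate())  # []
```

In this example, the largest layout the text allows (four MediaAgents with two partitions each, split across two logical DDBs) passes the check with no problems reported.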

For details on supported platforms, see Building Block Guide - Deduplication System Requirements.

You can use deduplication partitioned extended mode in the following scenarios:

- A MediaAgent hosts the DDBs of primary and secondary copies from four different sites. Primary and secondary copy data, usually from separate sources, access the same DDB MediaAgent, typically as part of a cross-site DASH copy for long-term retention.

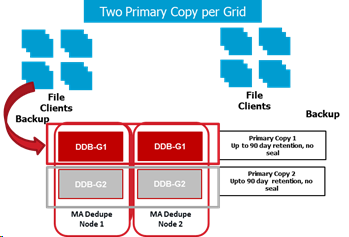

Four DDBs for Primary Copies per Grid

Use case: protecting large amounts of unstructured data with an incremental-forever strategy.

In this scenario, each MediaAgent hosts two DDBs for primary copies with 90-day retention.

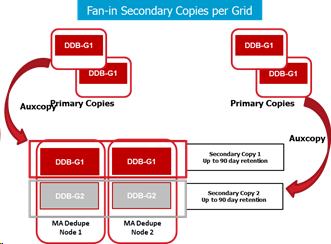

Four DDBs for Secondary Copies per Grid

Use case: a fan-in target for secondary copies from two or more DDB MediaAgents that manage primary copies.

In this scenario, each MediaAgent hosts two DDBs for secondary copies with 90-day retention.

The following tables provide the hardware requirements for Extra Large and Large environments in deduplication partitioned extended mode. Partitioned mode is not recommended for Medium, Small, and Extra Small environments.

The following terms are used in the hardware requirements:

- Deduplication node - A MediaAgent that hosts the DDB.
- Grid - The collection of deduplication nodes.

Important

- The following hardware requirements apply to MediaAgents with deduplication. They do not apply to tape libraries, MediaAgents without deduplication, or MediaAgents that use third-party deduplication applications.
- The suggested workloads are not software limitations; they are design guidelines for sizing under specific conditions.
- TB values are base-2 (1 TB = 2^40 bytes).
- To achieve the required IOPS, consult your hardware vendor for the most suitable configuration for your implementation.
- The index cache disk recommendation is for unstructured data types such as files, VMs, and granular messages. Structured data types such as applications and databases need significantly less index cache. The recommendations are per MediaAgent.
- We recommend using dedicated volumes for the index cache disk and the DDB disk.
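Because the TB values are base-2, a drive sold with a decimal capacity label can fall short of a requirement. The following sketch converts the base-2 figures from the tables into decimal TB; it assumes only the standard definitions of 2^40 and 10^12 bytes.

```python
TIB_BYTES = 2**40  # base-2 terabyte, as used in the tables
TB_BYTES = 10**12  # decimal terabyte, as commonly used on drive data sheets


def base2_to_decimal_tb(tb_base2: float) -> float:
    """Convert a base-2 TB figure from the tables into decimal TB."""
    return tb_base2 * TIB_BYTES / TB_BYTES


for value in (1.2, 2, 1200, 2000):
    print(f"{value} TB (base-2) = {base2_to_decimal_tb(value):.2f} TB (decimal)")
```

For example, a 2 TB (base-2) DDB disk corresponds to about 2.2 TB in decimal units, so a drive labeled "2 TB" by its vendor is slightly smaller than the requirement.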

Number of MediaAgents in a Partitioned DDB, Grid Backend Storage, and CPU/RAM

| Component | Extra large | Large |
|---|---|---|
| Number of MediaAgents in partitioned DDB | 4 | 4 |
| Grid backend storage [2, 3] | Up to 2000 TB | Up to 1200 TB |
| CPU/RAM per node | 16 cores, 128 GB (or 16 vCPUs/128 GB) | 12 cores, 64 GB (or 12 vCPUs/64 GB) |
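The table can be read as a lookup from the planned grid backend storage to a tier and its per-node CPU and RAM. The following sketch picks the smallest tier whose backend storage covers the requested capacity and totals CPU and RAM across the grid. The "smallest covering tier" rule is an assumption made for illustration, and the figures assume standard retention of up to 90 days, as noted in the footnotes.

```python
# (tier, max grid backend storage in base-2 TB, cores per node, RAM in GB per node)
TIERS = [
    ("Large", 1200, 12, 64),
    ("Extra large", 2000, 16, 128),
]
NODES_PER_GRID = 4  # both tiers in the table use four MediaAgents


def pick_tier(backend_tb: float) -> dict:
    """Return the smallest tier whose grid backend storage covers backend_tb."""
    for name, max_tb, cores, ram_gb in TIERS:
        if backend_tb <= max_tb:
            return {
                "tier": name,
                "nodes": NODES_PER_GRID,
                "cores_per_node": cores,
                "ram_gb_per_node": ram_gb,
                "total_cores": cores * NODES_PER_GRID,
                "total_ram_gb": ram_gb * NODES_PER_GRID,
            }
    raise ValueError(f"{backend_tb} TB exceeds the largest tier in the table (2000 TB)")


print(pick_tier(1500))
```

For 1500 TB of backend storage, the sketch selects the Extra large tier: four nodes with 16 cores and 128 GB each, or 64 cores and 512 GB of RAM across the grid.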

Disk Layout per Node

| Component | Extra large | Large |
|---|---|---|
| OS or software disk | 400 GB SSD class disk | 400 GB usable disk, minimum 4 spindles at 15K RPM or higher, or SSD class disk |
| Deduplication Database (DDB) disk 1 per node [1] | 2 TB SSD class disk/PCIe I/O cards [4], 2 GB controller cache memory. For Linux, the DDB volume must be configured by using the Logical Volume Management (LVM) package. | 1.2 TB SSD class disk/PCIe I/O cards [4], 2 GB controller cache memory. For Linux, the DDB volume must be configured by using the Logical Volume Management (LVM) package. |
| Deduplication Database (DDB) disk 2 per node [1] | 2 TB SSD class disk/PCIe I/O cards [4], 2 GB controller cache memory. For Linux, the DDB volume must be configured by using the Logical Volume Management (LVM) package. [9] See Building Block Guide - Deduplication Database. | 1.2 TB SSD class disk/PCIe I/O cards [4], 2 GB controller cache memory. For Linux, the DDB volume must be configured by using the Logical Volume Management (LVM) package. [9] See Building Block Guide - Deduplication Database. |
| Suggested IOPS for each DDB disk per node | 20K dedicated random IOPS [5] | 15K dedicated random IOPS [5] |
| Index cache disk per node [1, 7, 8] | 2 TB SSD class disk [4, 6] | 1 TB SSD class disk [4] |
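Because each node carries two DDB disks, it can help to add the per-node figures up across the four-node grid when planning SSD purchases. The following sketch does that arithmetic from the table. It assumes each DDB disk delivers its suggested IOPS independently, so the per-node IOPS figure is a simple sum; that assumption is not stated in the table.

```python
# Per-node disk layout from the table (sizes in base-2 TB).
DISK_LAYOUT = {
    "Extra large": {"ddb_disks": 2, "ddb_disk_tb": 2.0, "iops_per_ddb_disk": 20_000, "index_cache_tb": 2.0},
    "Large": {"ddb_disks": 2, "ddb_disk_tb": 1.2, "iops_per_ddb_disk": 15_000, "index_cache_tb": 1.0},
}


def grid_totals(tier: str, nodes: int = 4) -> dict:
    """Add up DDB and index cache disk requirements across every node in the grid."""
    spec = DISK_LAYOUT[tier]
    ddb_tb_per_node = spec["ddb_disks"] * spec["ddb_disk_tb"]
    return {
        "ddb_ssd_tb_per_node": ddb_tb_per_node,
        "ddb_ssd_tb_per_grid": ddb_tb_per_node * nodes,
        # Assumption: each DDB disk delivers its suggested IOPS independently.
        "ddb_iops_per_node": spec["ddb_disks"] * spec["iops_per_ddb_disk"],
        "index_cache_tb_per_grid": spec["index_cache_tb"] * nodes,
    }


for tier in DISK_LAYOUT:
    print(tier, grid_totals(tier))
```

For the Extra large tier, this works out to 4 TB of DDB SSD per node, 16 TB across the grid, and 8 TB of index cache across the grid.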

Suggested Workloads for Grid

| Component | Extra large | Large |
|---|---|---|
| | 400 | 300 |
| Laptop clients | Up to 20,000 per grid | Up to 10,000 per grid |
| Front End Terabytes (FET) | | |
| Primary copy only or secondary copy only, for grid | Notes | Notes |
| Mixed primary and secondary copy, for entire grid | Primary Copy | Primary Copy |

|

Supported Targets

| Component | Extra large | Large |
|---|---|---|
| Tape drives | Not recommended | Not recommended |
| Disk storage without Commvault deduplication | Not recommended | Not recommended |
| Deduplication disk storage | Up to 2000 TB, direct attached or NAS | Up to 1200 TB, direct attached or NAS |
| Third-party deduplication appliances | Not recommended | Not recommended |
| Cloud storage | Yes, primary copy on disk and secondary copy on cloud | Yes, primary copy on disk and secondary copy on cloud |
| Deploying MediaAgent on cloud / virtual environments | Yes. For AWS or Azure sizing, see the following guides: | Yes. For AWS or Azure sizing, see the following guides: |

Footnotes

1. We recommend using dedicated volumes for the index cache disk and the DDB disk.
2. Maximum size per DDB.
3. Assumes standard retention of up to 90 days. Longer retention affects the FET that this configuration can manage; the back-end capacity remains the same.
4. SSD class disk indicates PCIe-based cards or internal dedicated endurance value drives. We recommend MLC (multi-level cell) class or better SSDs.
5. A dedicated RAID 1 or RAID 10 group is recommended.
6. Recommendation for unstructured data types such as files, VMs, and granular messages. Structured data types such as applications and databases require considerably less index cache.
7. To improve indexing performance, we recommend storing index data on a solid-state drive (SSD). The following agents and cases require the best possible indexing performance:
   - Exchange Mailbox Agent
   - Virtual Server Agents
   - NAS filers running NDMP backups
   - Backing up large file servers
   - SharePoint Agents
   - Any case where maximum performance is critical
8. The index cache directory must be on a local drive. Network drives are not supported.
9. For Linux, host the DDB on LVM volumes so that DDB backups can use LVM software snapshots. We recommend thin-provisioned logical volumes (LVs) for DDB volumes to get better query and insert performance during DDB backups.
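The thin-provisioned LV recommendation for Linux DDB volumes typically means creating a thin pool and then a thin volume inside it. The following sketch only builds and prints the standard `lvcreate` commands for that; it does not run them, and it is not a Commvault-documented procedure. The volume group, pool, and LV names and the sizes are hypothetical placeholders (the pool is sized larger than the LV on the assumption that snapshots need headroom in the pool); check `lvcreate(8)` on your distribution before running anything as root.

```python
from __future__ import annotations

import shlex


def thin_ddb_commands(vg: str, pool: str, lv: str, pool_size: str, lv_size: str) -> list[list[str]]:
    """Build, but do not run, lvcreate commands for a thin pool and a thin LV for the DDB."""
    return [
        # Create a thin pool named <pool> inside volume group <vg>.
        ["lvcreate", "-L", pool_size, "-T", f"{vg}/{pool}"],
        # Create a thin-provisioned logical volume <lv> backed by that pool.
        ["lvcreate", "-V", lv_size, "-T", f"{vg}/{pool}", "-n", lv],
    ]


# Hypothetical names and sizes; review the commands before running them as root.
for cmd in thin_ddb_commands("vg_ddb", "ddb_pool", "ddb1", "1800G", "1500G"):
    print(shlex.join(cmd))
```

Printing the commands rather than executing them keeps the sketch safe to run on any machine and leaves the final sizing decision to the administrator.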

Related Topics

Tuning Performance When Using a Partitioned Deduplication Database